Let’s take a look at the dilemma of autonomous driving and at who should be held responsible for accidents involving self-driving cars.

Minority Report is Steven Spielberg’s adaptation of Philip K. Dick’s 1956 short story of the same name. Set in Washington, D.C., in 2054, the science fiction movie depicts a society whose pre-crime system arrests people before they commit murder, painting a chilling and bleak picture of the future. Spielberg filled the movie with high-tech gadgets that, at the time, seemed to belong to a distant future. Interestingly, many of its most advanced technologies are gradually being realized in the real world. Minority Report has many memorable scenes, and one of the most interesting is when a car drives itself down the road in place of Tom Cruise’s character, who cannot drive because he is busy outrunning his pursuers.

At the time of the movie’s release, self-driving cars were thought to be a long way off. However, since Google officially announced its plans to develop self-driving cars in 2010, the automotive and IT industries have been actively researching and investing in the technology, and commercialization has become a reality. As self-driving cars become a major trend in the automotive industry, automakers are paying close attention.

However, not everyone welcomes self-driving cars. Some argue that, from a consumer perspective, they are not reliable. In May 2016, a driver using Tesla’s Autopilot feature was killed when his car crashed into a tractor-trailer crossing the road ahead of him. The system failed to distinguish the white side of the trailer from the bright sky. The U.S. government concluded that Autopilot was not at fault and that the driver was responsible, because he failed to take action to avoid the collision while Autopilot was engaged. Tesla was thus cleared of liability for the first fatality in a self-driving car. Yet the crashes did not stop. According to National Highway Traffic Safety Administration data reported by the Washington Post and other news outlets, Tesla’s self-driving systems, including Autopilot and Full Self-Driving, were involved in 736 crashes in the U.S. in the four years since 2019, more than previously known. Autonomous systems accounted for 91% of all accidents. Consumers remain skeptical about the safety of self-driving systems. In fact, a study this year by the U.S. marketing intelligence firm J.D. Power found that potential customers’ distrust of self-driving cars has actually increased.

It’s not just consumer sentiment that the Tesla crash affected. In its wake, governments began overhauling their regulations in earnest. However, debate continues over who is responsible for self-driving car accidents, as well as over insurance coverage, legislation, and more. Suppose that in a fully autonomous vehicle, the driver only needs to set the destination and the car drives itself. Because the driver is not doing any driving, he or she is not responsible for an accident. Who, then, is responsible? It is unclear whether responsibility lies with the owner of the car, the manufacturer, or the state, given its duty of care and supervision. The insurance industry argues that manufacturers should be treated as a new source of liability because they are in a position to control the risk of accidents. The automotive industry, on the other hand, argues that holding manufacturers 100% responsible for traffic accidents would be excessive. In Germany, Tesla’s Autopilot feature is not permitted because it is still a test version that is not yet fully functional. South Korea, Japan, and Europe are discussing common operating standards for self-driving vehicles, with national adoption expected in 2018, including conditions under which a vehicle may overtake or change lanes without the driver’s hands on the wheel.

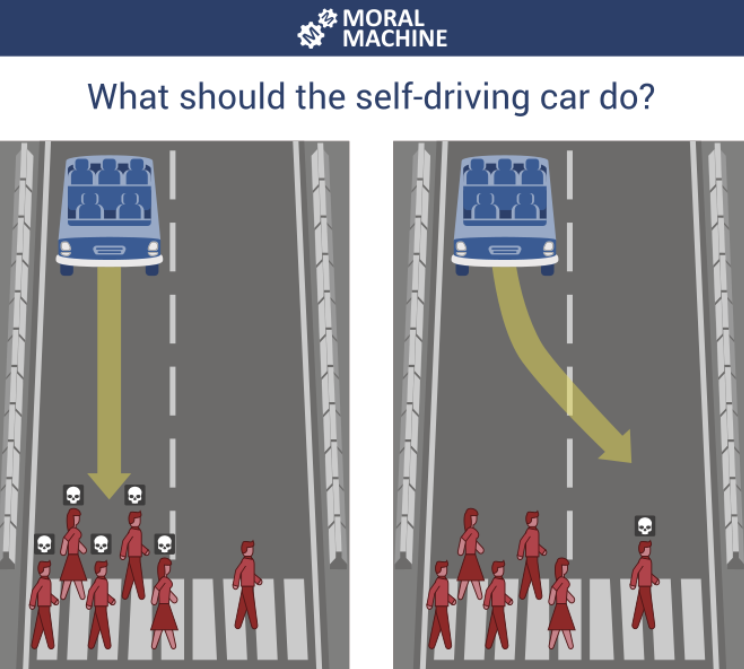

These legal issues are not the only blind spots for self-driving cars. There is also the ethical question of how the AI should judge when an accident is unavoidable. Take, for example, a thought experiment presented on the TED-Ed YouTube channel. Suppose a self-driving car needs to avoid an object falling off the truck in front of it. It has three options, and it must be programmed to choose among them. First, drive straight ahead and hit the object directly. Second, steer right and collide with a motorcycle. Third, steer left and collide with an SUV. In a situation like this, a choice has to be made: do you hit the motorcycle for your own safety, keep going straight for the safety of others even if it means risking your life against the object, or hit the SUV, which has a higher safety rating? If a human driver were behind the wheel, any outcome would be a simple reflex, not a conscious decision. In a self-driving car, however, the choice is one that someone has programmed into the car in advance. So on what basis did the programmer make such a judgment? If you think that’s too extreme, suppose the car analyzes and evaluates its passengers’ lives and makes an ethical judgment based on that assessment. In fact, value judgments like this are so central to autonomous systems that MIT runs a polling game called Moral Machine as part of ongoing research, asking participants to make these decisions themselves.

Moral Machine is a platform for collecting social perceptions of the ethical decisions made by artificial intelligence, such as driverless cars. In each scenario, a driverless car must make an ethical choice to sacrifice either its occupants or pedestrians, numbering from one to five. Their social status, physical condition, age, and species (dog, cat, human) are randomized, and respondents, acting as outside observers, must choose the outcome they find more acceptable. Let’s assume that we program self-driving cars based on these findings and social perceptions. Is it right to program a car to save as many lives as possible in an unavoidable accident, or should it protect its occupants at all costs? Once we have made this value judgment and programmed it, can we say with certainty that we are not responsible when an accident actually happens?

In my opinion, the legal issues surrounding self-driving cars can be resolved relatively easily once consensus is reached among individuals and between individuals and society, but the ethical issues are different. The rapid development of technology in modern times has always raised ethical issues. Advances in science and technology enrich our lives, but they often come into conflict with human ethics. These issues should be analyzed from an academic perspective to find realistic solutions that keep pace with scientific and technological development. There is no doubt that self-driving cars, like other technologies, will be a great innovation that improves our quality of life. Life-threatening accidents caused by drowsy, drunk, reckless, and retaliatory driving will be eliminated. Smoother traffic flow will reduce congestion and travel times, and we will be able to use our time more efficiently, for example by enjoying leisure activities on the way to our destinations instead of driving. However, self-driving cars, like any other technology, are bound to face ethical issues. I believe the ethical issues outlined in this article are not unique to autonomous driving; they also confront artificial intelligence, robots, and humanity as a whole. Questions such as whether human lives can be weighed against each other, and whether a human life carries a different weight from an animal’s, will have to be resolved before autonomous vehicles can be commercialized.

I’m a blog writer. I want to write articles that touch people’s hearts. I love Coca-Cola, coffee, reading and traveling. I hope you find happiness through my writing.